How Effective Altruism Lost Its Way

It is time for the EA movement to rediscover humanism.

I. The Quest to Maximize Human Well-Being

A decade and a half ago, the founders of two small Oxford-based nonprofits couldn’t have anticipated that they were launching one of the most significant philanthropic movements in a generation. Giving What We Can was created in 2009 to help people identify the most effective charities and commit to donating a substantial portion of their income. Two years later, 80,000 Hours (a reference to the average amount of time people spend on their careers over their working lives) was founded to explore which careers have the greatest positive impact. In October 2011, Will MacAskill, co-founder of both organizations, who was then working toward his philosophy PhD at Oxford, emailed the 80,000 Hours team. “We need a name for ‘someone who pursues a high impact lifestyle,’” he wrote. “‘Do-gooder’ is the current term, and it sucks.”

MacAskill would later explain that his team was “just starting to realize the importance of good marketing, and [was] therefore willing to put more time into things like choice of name.” He and over a dozen other do-gooders set out to choose a name that would encompass all the elements of their movement to direct people toward high-impact lives. What followed was a “period of brainstorming—combining different terms like ‘effective’, ‘efficient’, ‘rational’ with ‘altruism’, ‘benevolence’, ‘charity’.” After two months of internal polling and debate, there were 15 final options, including the Alliance for Rational Compassion, Effective Utilitarian Community, and Big Visions Network. The voters went with the Centre for Effective Altruism.

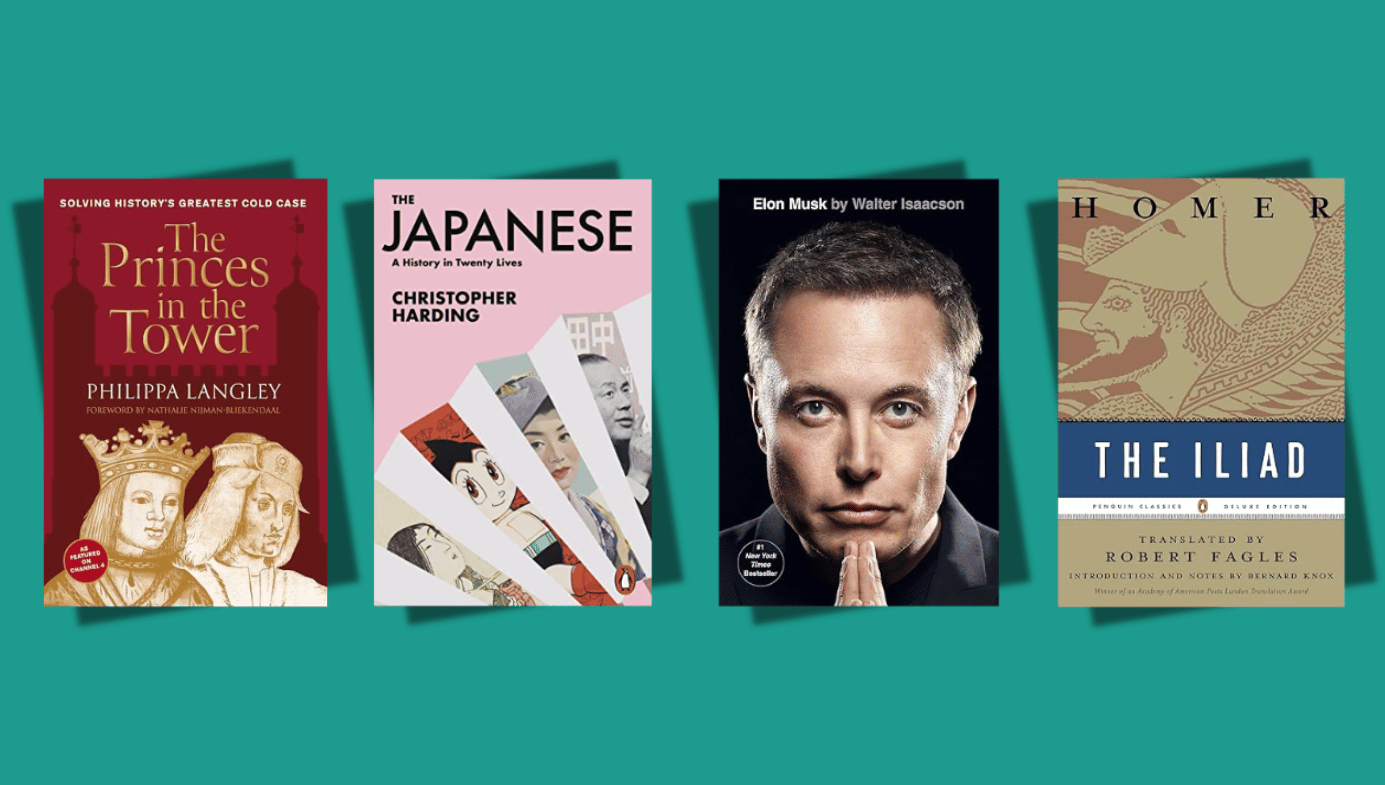

Over the past decade, Effective Altruism (EA) has grown from a small project led by Oxford academics (MacAskill co-founded Giving What We Can with his fellow Oxford philosopher, Toby Ord) to one of the largest philanthropic movements in the world. EA has attracted the attention of a wide and diverse array of influential people—from the philosopher Derek Parfit to Elon Musk—and the movement has directed billions of dollars toward causes such as global health and poverty, biosecurity, and animal welfare. EA has also made plenty of enemies, who have variously described the movement as a “Trojan horse for the vested interests of a select few,” dismissed it as an “austerely consequentialist” worldview “beloved of robotic tech bros everywhere with spare millions and allegedly twinging consciences,” and even accused it of providing “ideological cover for racism and sexism.”

The starting point for understanding EA is the first word in its name. The central problem identified by the movement’s founders is the lack of evidence behind many forms of charitable giving. EAs believe rigorous cost-benefit analyses should determine which causes and organizations are capable of using resources most effectively. While it may feel good to donate to a local soup kitchen or an animal shelter, EAs maintain that these charities don’t have nearly as much impact on human well-being as, say, a charity that distributes insecticide-treated bed nets to prevent malaria in sub-Saharan Africa.

While EA isn’t exclusively utilitarian, the greatest good for the greatest number is a rough approximation of the basic principle that many in the movement endorse. The utilitarian philosopher Peter Singer is one of the intellectual godfathers of EA, as his emphasis on applied ethics and an impartial sense of moral responsibility aligns with EA’s focus on objectively maximizing the good that can be done in the world. In a 1972 essay titled “Famine, Affluence, and Morality,” Singer presented a fundamental challenge to how most people view the parameters of moral responsibility. He wrote it amid the refugee crisis and mass starvation created by the Bangladesh Liberation War (along with the lingering aftermath of a devastating cyclone), and he observed that the citizens of wealthy countries had the collective resources to save many lives in the region with relatively modest financial contributions.

Singer’s essay presented a now-famous thought experiment: “If I am walking past a shallow pond and see a child drowning in it, I ought to wade in and pull the child out. This will mean getting my clothes muddy, but this is insignificant, while the death of the child would presumably be a very bad thing.” Singer says this scenario isn’t all that different from the situation that confronts us every day—we’re well aware of the immense suffering in the world that could be improved without “sacrificing anything of comparable moral importance,” yet we fail to act. While there’s a clear psychological difference between allowing a child to drown right in front of you and failing to donate a lifesaving amount to an effective global nonprofit, the end result of each choice is the same.

Singer often introduces other variables to sharpen his point: imagine you’re wearing expensive shoes or pants that will be ruined in the pond. As Singer, MacAskill, and many EAs observe, it doesn’t cost much to dramatically improve (or even save) a life. We live in a world where people lack access to clean drinking water, shelter, and basic medical treatment. The current cost of an anti-malarial bed net is around $2, while it costs a little over $1 to provide vitamin A supplementation that can prevent infection, blindness, and death. Even if you were wearing an expensive watch or piece of jewelry, imagine the moral opprobrium that would await you if this were the reason you stood on the shore and allowed a child to drown. Yet we fail to incur more modest costs to help the global poor every day: GiveWell (a major EA-affiliated organization that assesses the effectiveness of nonprofits and facilitates donations) estimates that a $5,000 donation to the Malaria Consortium could save a life.

The moral and logical force of “Famine, Affluence, and Morality” would eventually win converts around the world. MacAskill read it when he was 18, and it set him on a path toward EA. One of the revelations that attract people to EA is just how effective charitable giving can be, which is the theme of MacAskill’s 2015 book Doing Good Better. It’s easy to be cynical about philanthropy when you read about multibillion-dollar donations to already-rich elite schools, the millions of dollars wasted on trendy but failed interventions, and most nonprofits’ inability to provide clear evidence of their performance. While major philanthropists such as MacKenzie Scott provide billions of dollars to hundreds of favored nonprofits with next to no demand for accountability, GiveWell-approved organizations must be capable of demonstrating that their programs actually work.

There’s a reason GiveWell’s current top recommended charities are all focused on global health—this is a neglected area where low-cost interventions can have a massive impact. It costs about 180 times less to save a life in the world’s poorest countries, where nearly 700 million people live on less than $2.15 per day, than it costs in the United States or the UK.

This reflects another essential principle of EA: universalism. A decade after he wrote “Famine, Affluence, and Morality,” Singer published The Expanding Circle (1981), in which he examines the evolutionary origins and logic of ethics. Our altruistic impulses were once limited to our families and tribes, as cooperation on this scale helped early human beings survive and propagate their genes. However, this small-scale reciprocal altruism has steadily grown into a sense of ethical obligation toward larger and larger communities, from the tribe to the city-state to the nation to the species. For Singer and many EAs, this obligation extends beyond the species to non-human animals. EAs try to take what the utilitarian philosopher Henry Sidgwick (a major influence on Singer) described as the “point of view of the universe,” which means looking beyond tribal loyalties and making objective ethical commitments.

There’s overwhelming evidence for the effectiveness of EA’s efforts in many fields. EA can plausibly claim to have contributed to a significant reduction in malaria infections and deaths, the large-scale treatment of chronic parasitic infections such as schistosomiasis, a significant increase in routine childhood vaccinations, an influx of direct cash transfers to the global poor, and much more (for a summary of EA’s accomplishments, see the overview posted on Astral Codex Ten). EA has also invested in overlooked fields such as pandemic preparedness and biodefense. EA’s animal welfare advocacy has supported successful regulations that prohibit cruel forms of confinement; it has funded research into alternative proteins and other innovations that could reduce animal suffering (like in ovo sexing to prevent the slaughter of male chicks); and it has generally improved conditions for factory-farmed animals around the world. GiveWell has transferred over $1 billion to effective charities, while Giving What We Can has over $3.3 billion in pledged donations.

By focusing on highly effective and evidence-based programs which address widely neglected problems, EA has had a positive impact on a vast scale. Despite this record—and at a time when EA commands more resources than ever—the movement is in the process of a sweeping intellectual and programmatic transformation. Causes that once seemed ethically urgent have been supplanted by new fixations: the existential threat posed by AI, the need to prepare humankind for a long list of other apocalyptic scenarios (including plans to rebuild civilization from the ground up should the need arise), and the desire to preserve human consciousness for millions of years, perhaps even shepherding the next phase of human evolution. Forget The Life You Can Save (the title of Singer’s 2009 book which argues for greater efforts to alleviate global poverty)—many EAs are now more focused on the species they can save.

In one sense, this shift reflects the utilitarian underpinnings of the movement. If there’s even the slightest possibility that superintelligent AI will annihilate or enslave us, preventing that outcome offers more expected utility than all the bed nets and vitamin A supplements in the world. The same applies to any other existential threat, especially when you factor in all future human beings (and maybe even “posthumans”). This is what’s known as “longtermism.”

The problem with these grand ambitions—saving humanity from extinction and enabling our species to reach its full potential millions of years from now—is that they ostensibly justify any cost in the present. Diverting attention and resources from global health and poverty is an enormous gamble, as it will make many lives poorer, sicker, and shorter in the name of fending off threats that may or may not materialize. But many EAs will tell you that even vanishingly small probabilities and immense costs are acceptable when we’re talking about the end of the world or hundreds of trillions of posthumans inhabiting the far reaches of the universe.

This is a worldview that’s uniquely susceptible to hubris, dogma, and motivated reasoning. Once you’ve decided that it’s your job to save humanity, and that making huge investments in, say, AI safety is the way to do it, fanaticism isn’t just a risk; it’s practically obligatory. This is particularly true given the pace of technological development. When our AI overlords could be arriving any minute, is another child vaccination campaign really what the world needs? Unlike the other interventions EA has sponsored, investments in existential risk mitigation offer scant metrics for tracking success or failure. Those soliciting and authorizing such investments can’t be held accountable, which means they can continue telling themselves that what they’re doing is quite possibly the most important work that has ever been undertaken in the history of the species, even if it’s actually just an exalted waste of time and money.

II. The Turn Towards Longtermism

The EA website notes that the movement is “based on simple ideas—that we should treat people equally and it’s better to help more people than fewer—but it leads to an unconventional and ever-evolving picture of doing good.” In recent years, this evolution has oriented the movement toward esoteric causes like preventing AI Armageddon and protecting the interests of voiceless unborn trillions. While there are interesting theoretical arguments for these causes, there’s a disconnect between an almost neurotic focus on hard evidence of effectiveness in some areas of EA and a willingness to accept extremely abstract and conjectural “evidence” in others.

The charity evaluator GiveWell has long been associated with EA. Its founders Holden Karnofsky and Elie Hassenfeld are both EAs (Karnofsky also co-founded the EA-affiliated organization Open Philanthropy and Hassenfeld manages the EA Global Health and Development Fund), and the movement is a major backer of GiveWell’s work. It would be difficult to find an organization that is more committed to rigorously assessing the effectiveness of charities, from the real-world impact of programs to how well organizations can process and deploy new donations. GiveWell currently recommends only four top charities: the Malaria Consortium, the Against Malaria Foundation, Helen Keller International, and New Incentives.

GiveWell notes that its criteria for designating top charities are so stringent that they may keep highly effective organizations off its list, while many former top charities (such as Unlimit Health) continue to have a significant impact in their focus areas. But this problem is a testament to GiveWell’s strict commitment to maximizing donor impact. The organization has 37 full-time research staff who conduct approximately 50,000 hours of research annually. When I emailed GiveWell a question about its process for determining the costs of certain outcomes, I promptly received a long response explaining exactly which metrics are used, how they are weighted based on organizations’ target populations, and how researchers think about complex criteria such as outcomes that are “as good as” averting deaths.

GiveWell says that one downside to its methodology is the possibility that “seeking strong evidence and a straightforward, documented case for impact can be in tension with maximizing impact.” This observation captures a deep epistemic split within EA in the era of longtermism and existential anxiety.

In a 2016 essay published by Open Philanthropy, Karnofsky makes the case for a “hits-based” approach to philanthropy that is willing to tolerate high levels of risk to identify and support potentially high-reward causes and programs. Karnofsky’s essay offers an illuminating look at why many EAs are increasingly focused on abstruse causes like mitigating the risk of an AI apocalypse and exploring various forms of “civilizational recovery” such as resilient underground food production or fossil fuel storage, in the event that the species needs to reindustrialize in a hurry. This sort of thinking is common among EAs. Ord is a senior research fellow at Oxford’s Future of Humanity Institute, where his “current research is on avoiding the threat of human extinction,” while MacAskill’s focus on longtermism has made him increasingly concerned about existential risk.

According to Karnofsky, Open Philanthropy is “open to supporting work that is more than 90 percent likely to fail, as long as the overall expected value is high enough.” He argues that a few “enormous successes” could justify a much larger number of failed projects, which is why he notes that Open Philanthropy’s principles are “very different from those underlying our work on GiveWell.” He even observes that some projects will “have little in the way of clear evidential support,” but contends that philanthropists are “far less constrained by the need to make a profit or justify their work to a wide audience.”

While Karnofsky is admirably candid about the liabilities of Open Philanthropy’s approach, some of his admissions are unsettling. He explains that the close relationships between EAs who are focused on particular causes can lead to a “greatly elevated risk that we aren’t being objective, and aren’t weighing the available evidence and arguments reasonably.” But he says the risk of creating “intellectual bubbles” and “echo chambers” is worth taking for an organization focused on hits-based philanthropy: “When I picture the ideal philanthropic ‘hit,’” he writes, “it takes the form of supporting some extremely important idea, where we see potential while most of the world does not.” Throughout his essay, Karnofsky is careful to acknowledge risks such as groupthink, but his central point is that these risks are unavoidable if Open Philanthropy is ever going to score a “hit”—an investment that has a gigantic impact and justifies all the misses that preceded it.

Of course, it’s possible that a hit will never materialize. Maybe the effort to minimize AI risk (a core focus for Open Philanthropy) will save the species one day. On the other hand, it may turn out to be a huge waste of resources that could have been dedicated to saving and improving lives now. Open Philanthropy has already directed hundreds of millions of dollars toward mitigating AI risk, and this amount will likely rise considerably in the coming years. When Karnofsky says “one of our core values is our tolerance for philanthropic ‘risk,’” he no doubt recognizes that this risk goes beyond the possibility of throwing large sums of money at fruitless causes. As any EA will tell you, the opportunity cost of neglecting some causes in favor of others can be measured in lives.

In the early days of EA, the movement was guided by a powerful humanist ethos. By urging people to donate wherever it would do the most good—which almost always means poor countries that lack the basic infrastructure to provide even rudimentary healthcare and social services—EAs demonstrated a real commitment to universalism and equality. While inequality has become a political obsession in Western democracies, the most egregious forms of inequality are global. It’s encouraging that such a large-scale philanthropic and social movement urges people to look beyond their own borders and tribal impulses when it comes to doing good.

Strangely enough, as EA becomes more absorbed in the task of saving humanity, the movement is becoming less humanistic. Taking the point of view of the universe could mean prioritizing human flourishing without prejudice, or it could mean dismissing human suffering as a matter of indifference in the grand sweep of history. Nick Bostrom is a Swedish philosopher who directs Oxford’s Future of Humanity Institute and a key figure in the longtermism movement (as well as a prominent voice on AI safety). In 2002, Bostrom published a paper on “human extinction scenarios,” which calls for a “better understanding of the transition dynamics from a human to a ‘posthuman’ society” and captures what would later become a central assumption of longtermism. When set against the specter of existential risk, Bostrom argues that other calamities are relatively insignificant:

Our intuitions and coping strategies have been shaped by our long experience with risks such as dangerous animals, hostile individuals or tribes, poisonous foods, automobile accidents, Chernobyl, Bhopal, volcano eruptions, earthquakes, draughts, World War I, World War II, epidemics of influenza, smallpox, black plague, and AIDS. These types of disasters have occurred many times and our cultural attitudes towards risk have been shaped by trial-and-error in managing such hazards. But tragic as such events are to the people immediately affected, in the big picture of things—from the perspective of humankind as a whole—even the worst of these catastrophes are mere ripples on the surface of the great sea of life.

According to Bostrom, none of the horrors he listed has “significantly affected the total amount of human suffering or happiness or determined the long-term fate of our species.” Aside from the callous way Bostrom chose to make his point, it’s strange that he doesn’t think that World War II—which accelerated the development of nuclear weapons—could possibly determine the long-term fate of our species. While many longtermists like Elon Musk insist that AI is more dangerous than nuclear weapons, it would be an understatement to say that the available evidence (i.e., the long history of brushes with nuclear war) testifies against that view. Given that many longtermists are also technologists who are enamored with AI, it’s unsurprising that they elevate the risk posed by the technology above all others.

Eliezer Yudkowsky is one of the most influential advocates of AI safety, particularly among EAs (his rationalist “LessWrong” community embraced the movement early on). In a March 2023 article, Yudkowsky argued that the “most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die.” The rest of the piece is a torrent of hoarse alarmism that climaxes with a bizarre demand to make AI safety the central geopolitical and strategic priority of our time. After a dozen variations of “we’re all going to die,” Yudkowsky declares that countries must “Make it explicit in international diplomacy that preventing AI extinction scenarios is considered a priority above preventing a full nuclear exchange, and that allied nuclear countries are willing to run some risk of nuclear exchange if that’s what it takes to reduce the risk of large AI training runs.” The willingness to toy with a known existential threat to fend off a theoretical existential threat is the height of longtermist hubris.

In a 2003 paper titled “Astronomical Waste: The Opportunity Cost of Delayed Technological Development,” Bostrom argues that sufficiently advanced technology could sustain a huge profusion of human lives in the “accessible region of the universe.” Like MacAskill, Bostrom suggests that the humans alive today, together with those who came before us, account for a minuscule fraction of all possible lives. “Even with the most conservative estimate,” he writes, “assuming a biological implementation of all persons, the potential for one hundred trillion potential human beings is lost for every second of postponement of colonization of our supercluster.” It’s no wonder that Bostrom regards all the wars and plagues that have ever befallen our species as “mere ripples on the surface of the great sea of life.”

Given the title of Bostrom’s paper, it is tempting to assume that he was more sanguine about the risks posed by new technology two decades ago. But this isn’t the case. He argued that the “lesson for utilitarians is not that we ought to maximize the pace of technological development, but rather that we ought to maximize its safety, i.e. the probability that colonization will eventually occur.” Fanatical zeal about AI safety is a natural corollary to the longtermist conviction that hundreds of trillions of future lives are hanging in the balance. And for transhumanists like Bostrom, who believe “current humanity need not be the endpoint of evolution” and hope to bridge the divide to “beings with vastly greater capacities than present human beings have,” it’s possible that these future lives could be much more significant than the ones we’re stuck with now. While many EAs still care about humanity in its current state, MacAskill knows as well as anyone how the movement is changing. As he told an interviewer in 2022: “The Effective Altruism movement has absolutely evolved. I’ve definitely shifted in a more longtermist direction.”

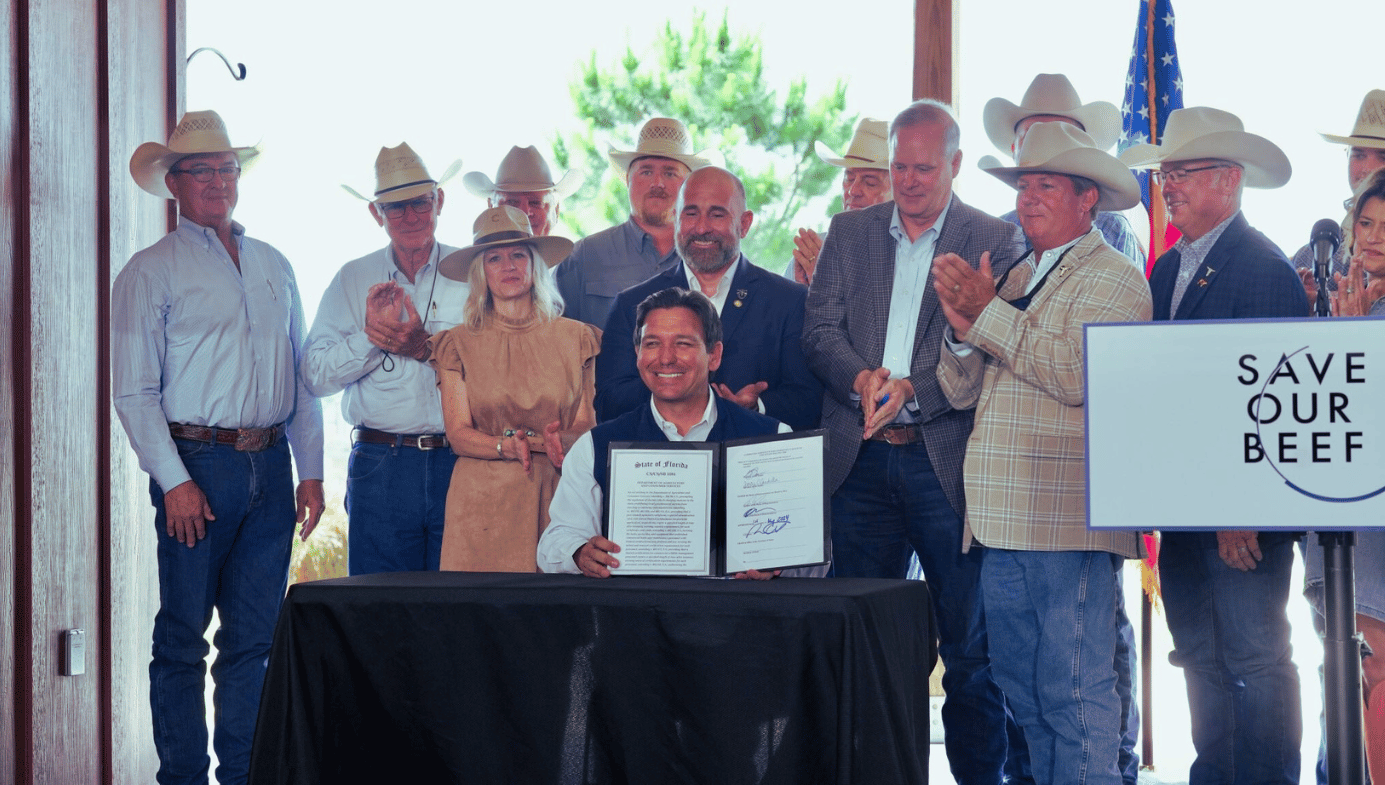

When MacAskill announced the release of his 2022 treatise on longtermism, What We Owe the Future, Musk said the book is “worth reading” and a “close match for my philosophy.” Musk advises the Future of Life Institute, and he’s become one of the loudest voices warning about AI risk. His name was attached to a recent open letter published by the institute that called for a pause of at least six months on training AI systems more powerful than GPT-4. MacAskill says that when he briefly met Musk at an Effective Altruism Global Summit in 2015, he “tried to talk to him [Musk] for five minutes about global poverty and got little interest.” Musk was at the summit to participate in a panel on AI. It’s likely that MacAskill will keep on encountering people who don’t share his interest in alleviating global poverty, because they’re convinced that humanity has more pressing concerns.

As MacAskill continues to oversee the evolution of EA as it marches away from humanism and toward a neurotic mix of techno-utopianism and doom-mongering, he may want to rediscover his 2011 epiphany about the importance of good marketing, because 2023 has been one long PR disaster for the movement.

III. Two Crises

Two of the biggest stories in tech this year took place just weeks apart: the conviction of disgraced crypto magnate Sam Bankman-Fried on charges of fraud and money laundering and the firing of OpenAI CEO Sam Altman. Altman was later reinstated as the head of OpenAI after most of the company threatened to resign over his firing, but Bankman-Fried may face decades in prison. Beyond the two CEOs named Sam at the center of these stories, there’s another connection—many people believe EA is to blame in both cases.

Although the reasons for Altman’s ouster from OpenAI still aren’t clear, many journalists and prominent figures in the field quickly identified what they believed was the trigger: hostility toward his leadership from Effective Altruists on the OpenAI board. Tasha McCauley and Helen Toner, board members who wanted to get rid of Altman, are involved with EA. Another board member who voted to fire Altman was OpenAI chief scientist Ilya Sutskever, who is concerned about AI safety (though he isn’t directly affiliated with EA). A natural conclusion is that Altman was pushed out because the EAs on the board didn’t think he was taking the threats posed by AI seriously enough.

After a Wall Street Journal article connected the chaos at OpenAI with EA, the company stated: “We are a values-driven company committed to building safe, beneficial AGI and effective altruism is not one of [those values].” Altman has described EA as an “incredibly flawed movement” which displays some “very weird emergent behavior.” Many articles have linked EA to the crisis at OpenAI, with headlines like: “The AI industry turns against its favorite philosophy” (Semafor), “Effective Altruism contributed to the fiasco at OpenAI” (Forbes), and “OpenAI’s crackup is another black eye for effective altruism” (Fortune). Even backers of EA have echoed these sentiments. Semafor reports that Skype co-founder Jaan Tallinn, a major contributor to EA causes (specifically those relating to AI risk), said the “OpenAI governance crisis highlights the fragility of voluntary EA-motivated governance schemes.”

but though i think EA is an incredibly flawed movement, i will say:

as individuals, EAs are almost always exceptionally nice, well-meaning people. the movement has some very weird emergent behavior, but i'm happy to see the self-reflection and feel confident it'll emerge better.

Sam Altman (@sama), November 17, 2022

The precise role of EA in the turmoil at OpenAI is fuzzy. While the New York Times reported that Sutskever was “increasingly worried that OpenAI’s technology could be dangerous and that Mr. Altman was not paying enough attention to that risk,” it also noted that he “objected to what he saw as his diminished role inside the company.” Sutskever later declared, “I deeply regret my participation in the board’s actions” and signed an open letter calling for Altman’s reinstatement and the board’s resignation. In a recent interview, Helen Toner claimed that Altman wasn’t fired over concerns about his attitude toward AI safety. Twitch co-founder Emmett Shear briefly served as interim CEO of OpenAI, and he said the “board did *not* remove Sam over any specific disagreement on safety, their reasoning was completely different from that.”

Nevertheless, the anti-EA narrative has been firmly established, and critics of EA will continue to use the OpenAI scandal to bludgeon the movement. Coinbase CEO Brian Armstrong suggested that the world was witnessing an EA “coup” at OpenAI. The entrepreneur and investor Balaji Srinivasan has declared that every AI company would have to choose between EA and “e/acc” (effective accelerationism), a movement that defines itself in direct opposition to the “AI doomerism” that has captured the imagination of many EAs. The influence of doomerism has made EA increasingly toxic to many in the field. Even before the firing of Altman, a spokesperson for OpenAI announced, “None of our board members are Effective Altruists.” Princeton computer science professor Arvind Narayanan summarized the situation with OpenAI: “If this coup was indeed motivated by safetyism it seems to have backfired spectacularly, not just for OpenAI but more generally for the relevance of EA thinking to the future of the tech industry.”

Although it isn’t fair to pin the blame for the mess at OpenAI on EA, the movement is suffering for its infatuation with AI risk. Even many supporters of EA are worried about its trajectory: “I do fear that some parts of the movement have jumped the shark,” Steven Pinker recently told me. “AI doomerism is a bad turn—I don’t think the most effective way to benefit humanity is to pay theoreticians to fret about how we’re all going to be turned into paperclips.” This is a reference to a thought experiment originated by Bostrom, whose 2014 book Superintelligence is an essential text in the AI safety movement. Bostrom worries that a lack of AI “alignment” will lead superintelligent systems to carry out simple commands in disastrous and counterintuitive ways. An AI told to make paperclips, for instance, might do so by harvesting human bodies for atoms. When Pinker later reiterated his point on X, describing EA as “cultish” and lamenting its emphasis on AI risk (but reaffirming his support for the movement’s founding principles), MacAskill responded that EA is “not a package of particular views.” He continued:

You can certainly be pro-EA and sceptical of AI risk. Though AI gets a lot of attention on Twitter, it’s still the case that most money moved within broader EA goes to global health and development—over $1 billion has now gone to GiveWell-recommended charities. Anecdotally, many of the EAs I know who work on AI still donate to global health and wellbeing (including me; I currently split my donations across cause areas).

MacAskill then made a series of familiar AI risk arguments, such as the idea that the collective power of AI may become “far greater than the collective power of human beings.” EA’s connection to AI doomerism doesn’t just “get a lot of attention on Twitter”—it has become a defining feature of the movement. Consequentialists like MacAskill should recognize that radical ideas about AI and existential risk may be considered perfectly reasonable in philosophy seminars or tight-knit rationalist communities like EA, but they have political costs when they’re espoused more broadly. They can also lead people to dangerous conclusions, like the idea that we should risk nuclear war to inhibit the development of AI.

The OpenAI saga might not have been such a crisis for EA if it weren’t for another ongoing PR disaster, which had already made 2023 a very bad year for the EA movement before Altman’s firing. When the cryptocurrency exchange FTX imploded in November 2022, the focus quickly turned to the motivations of its founder and CEO, Sam Bankman-Fried. Bankman-Fried allegedly transferred billions of dollars in customer funds to plug gaping holes in the balance sheet of his crypto trading firm Alameda Research. This resulted in the overnight destruction of his crypto empire and massive losses for many customers and investors. The fall of FTX also created a crisis of confidence in crypto more broadly, which shuttered companies across the industry and torched billions more in value. On November 2, Bankman-Fried was found guilty on seven charges, including wire fraud, conspiracy, and money laundering.

Bankman-Fried was one of the most famous EAs in the world, and critics were quick to blame the movement for his actions. “If in a decade barely anyone uses the term ‘effective altruism’ anymore,” Erik Hoel wrote after the FTX collapse, “it will be because of him.” Hoel argues that Bankman-Fried’s behavior was a natural outgrowth of a foundational philosophy within EA: act utilitarianism, which holds that the best action is the one which ultimately produces the best consequences. Perhaps Bankman-Fried was scamming customers for what he saw as the greater good. Hoel repeats the argument made by many EA critics after the FTX scandal—that any philosophy concerned with maximizing utility as broadly as possible will incline its practitioners toward ends-justify-the-means thinking.

There are several problems with this argument. When Bankman-Fried was asked (before the scandal) about the line between doing "bad even for good reasons," he said: "The answer can't be there is no line. Or else, you know, you could end up doing massively more damage than good." An act utilitarian can recognize that committing fraud to make and donate money may not lead to the best consequences, because the fraud could be exposed, which would destroy all future earning and donating potential. Bankman-Fried later admitted that his publicly stated ethical principles amounted to little more than a cynical PR strategy. Whether or not this is true, tarring an entire movement with the actions of a single unscrupulous member (whose true motivations are opaque and probably inconsistent) doesn't make much sense.

In his response to the FTX crisis, MacAskill stated that prominent EAs (including himself, Ord, and Karnofsky) have explicitly argued against ends-justify-the-means reasoning. Karnofsky published a post that discussed the dangers of this sort of thinking just months before the FTX blowup, and noted that there’s significant disagreement on ultimate ends within EA:

EA is about maximizing how much good we do. What does that mean? None of us really knows. EA is about maximizing a property of the world that we’re conceptually confused about, can’t reliably define or measure, and have massive disagreements about even within EA. By default, that seems like a recipe for trouble.

This leads Karnofsky to conclude that it’s a “bad idea to embrace the core ideas of EA without limits or reservations; we as EAs need to constantly inject pluralism and moderation.” MacAskill is similarly on guard against the sort of fanaticism that could lead an EA to steal or commit other immoral actions in service of a higher purpose. As he writes in What We Owe the Future, “naive calculations that justify some harmful action because it has good consequences are, in practice, almost never correct. … It’s wrong to do harm even when doing so will bring about the best outcome.” Ord makes the same case in his 2020 book The Precipice:

When something immensely important is at stake and others are dragging their feet, people feel licensed to do whatever it takes to succeed. We must never give in to such temptation. A single person acting without integrity could stain the whole cause and damage everything we hope to achieve.

Bankman-Fried’s actions weren’t an example of EA’s founding principles at work. Many EAs were horrified by his crimes, and there are powerful consequentialist arguments against deception and theft. The biggest threat to EA doesn’t come from core principles like evidence-based giving, universalism, or even consequentialism—it comes from the tension between these principles and some of the ideas that are taking over the movement. A consequentialist should be able to see that EA’s loss of credibility could have a severe impact on the long-term viability of its projects. Prominent AI doomers are entertaining the thought of nuclear war and howling about the end of the world. Business leaders in AI—the industry EA hopes to influence—are either attacking or distancing themselves from the movement. For EAs who take consequences seriously, now is the time for reflection.

IV. Recovering the Humanist Impulse

“He told me that he never had a bed-nets phase,” the New Yorker’s Gideon Lewis-Krauss recalled from a May 2022 interview with Bankman-Fried, who “considered neartermist causes—global health and poverty—to be more emotionally driven.” Lewis-Krauss continued:

He was happy for some money to continue to flow to those priorities, but they were not his own. “The majority of donations should go to places with a longtermist mind-set,” he said, although he added that some intercessions coded as short term have important long-term implications. He paused to pay attention for a moment. “I want to be careful about being too dictatorial about it, or too prescriptive about how other people should feel. But I did feel like the longtermist argument was very compelling. I couldn’t refute it. It was clearly the right thing.”

Like many EAs, Bankman-Fried was attracted to the movement for its unsentimental cost-benefit calculations and rational approach to giving. But while these features convinced many EAs to support GiveWell-recommended charities, they led Bankman-Fried straight to longtermism. After all, why should we be inordinately concerned with the suffering of a few billion people now when the ultimate well-being of untold trillions—whose consciousness could be fused with digital systems and spread across the universe one day—is at stake?

Bankman-Fried’s dismissive and condescending term “bed-nets phase” encapsulates a significant source of tension within EA: neartermists and longtermists are interested in causes with completely different evidentiary standards. GiveWell’s recommendations are contingent on the most rigorous forms of evidence available for determining nonprofit performance, such as randomized controlled trials. But longtermists rely on vast, immeasurable assumptions (about the capacities of AI, the shape of humanity millions of years from now, and so on) to speculate about how we should behave today to maximize well-being in the distant future. Pinker has explained what’s wrong with this approach, arguing that longtermism “runs the danger of prioritizing any outlandish scenario, no matter how improbable, as long as you can visualize it having arbitrarily large effects far in the future.” Expected value calculations won’t do much good if they’re based on flawed assumptions.

Longtermists create the illusion of precision when they discuss issues like AI risk. In 2016, Karnofsky declared that there’s a “nontrivial likelihood (at least 10 percent with moderate robustness, and at least 1 percent with high robustness) that transformative AI will be developed within the next 20 years.” This would be a big deal, as Karnofsky defines “transformative AI” as “AI that precipitates a transition comparable to (or more significant than) the agricultural or industrial revolution.” Elsewhere, he admits: “I haven’t been able to share all important inputs into my thinking publicly. I recognize that our information is limited, and my take is highly debatable.” All this hedging may sound scrupulous and modest, but it also allows longtermists to claim (or to convince themselves) that they’ve done adequate due diligence and justified the longshot philanthropic investments they’re making. While Karnofsky acknowledges the risks of groupthink, overconfidence, etc., he also systematically explains these risks away as unavoidable aspects of hits-based philanthropy.

Surveys of AI experts often produce frightening results, which are routinely cited as evidence for the effectiveness of investments in AI safety. For example, a 2022 survey asked: “What probability do you put on human inability to control future advanced AI systems causing human extinction or similarly permanent and severe disempowerment of the human species?” The median answer was 10 percent, which inevitably produced a flood of alarmist headlines. And this survey was conducted before the phenomenal success of ChatGPT intensified AI safety discussions, so it’s likely that respondents would be even gloomier now. Regardless of how carefully AI researchers have formulated their thoughts, expert forecasting is an extremely weak form of evidence compared to the evidence EAs demand in other areas. Does GiveWell rely on expert surveys or high-quality studies to determine the effectiveness of programs it supports?

It's not that longtermists haven't thought deeply about their argument; MacAskill says he worked harder on What We Owe the Future than on any other project, and there's no reason to doubt him. But longtermism is inherently speculative. As Karnofsky admits, the keyword for hits-based philanthropists is "risk": they're prepared to be wrong most of the time. And for longtermists, this risk is compounded by the immense difficulty of predicting outcomes far into the future. As Pinker puts it, "If there are ten things that can happen tomorrow, and each of those things can lead to ten further outcomes, which can lead to ten further outcomes, the confidence that any particular scenario will come about should be infinitesimal." We just don't know what technology will look like a few years from now, much less a few million years from now. If there were longtermists in the 19th century, they would have been worried about the future implications of steam power.

Our behaviors and institutions often have a longtermist orientation by default. Many Americans and Europeans are worried about the corrosion of democratic norms in an era of nationalist authoritarianism, and strengthening democracy is a project with consequences that extend well beyond the next couple of generations. Countless NGOs, academics, and diplomats are focused on immediate threats to the long-term future of humanity such as great power conflict and the risk of nuclear war. The war in Ukraine has pushed nuclear brinkmanship to a more dangerous point than at any time since the Cold War: Russia has been pulling out of longstanding nuclear treaties and threatening the use of nuclear weapons since the beginning of the invasion. Meanwhile, Beijing is building up its nuclear arsenal as Xi Jinping continues to insist that China will take Taiwan one way or another. While longtermists support some programs that are focused on nuclear risk, their disproportionate emphasis on AI is a reflection of their connections to Silicon Valley and "rationalist" communities that take Musk's view about the relative dangers of AI and nuclear weapons.

There has always been criticism of EA as a cold and clinical approach to doing good. Critics are especially hostile to EA concepts like "earning to give," which suggests that the best way to maximize impact may be to earn as much as possible and donate it rather than taking a job with an NGO, becoming a social worker, or doing some other work that contributes to the public good. But the entire point of EA was to demonstrate that the world needs to think about philanthropy differently, challenging everything from the taboo against questioning how people give to the widespread acceptance of little to no accountability among nonprofits.

EAs are now treating their original project—unbiased efforts to do as much good for as many people as possible—as some kind of indulgence. In his profile of MacAskill, Lewis-Krauss writes: “E.A. lifers told me that they had been unable to bring themselves to feel as though existential risk from out-of-control A.I. presented the same kind of ‘gut punch’ as global poverty, but that they were generally ready to defer to the smart people who thought otherwise.” One EA said, “I still do the neartermist thing, personally, to keep the fire in my belly.” Many critics of EA question the movement because helping people thousands of miles away doesn’t give them enough of an emotional gut punch. They believe it’s better for the soul to keep your charitable giving local—by helping needy people right in front of you and supporting your own community, you’ll be a better citizen and neighbor. These are the comfortable forms of solidarity that never cause much controversy because they come so naturally to human beings. Don’t try to save the world, EAs are often told—it’s a fool’s errand and you’ll neglect what matters most.

But in an era of resurgent nationalism and tribalism, EA offers an inspiring humanist alternative. EAs like MacAskill and Singer taught many of us to look past our own borders and support people who may live far away, but whose lives and interests matter every bit as much as our own. It’s a tragedy that the “smart people” in EA now believe we should divert resources from desperate human beings who need our help right now to expensive and speculative efforts to fend off the AI apocalypse. EAs have always focused on neglected causes, which means there’s nobody else to do the job if they step aside.

Hopefully, there are enough EAs who haven’t yet been swayed by horror stories about AI extinction or dreams of colonizing the universe, and who still feel a gut punch when they remember that hundreds of millions of people lack the basic necessities of life. Perhaps the split isn’t between longtermists and neartermists—it’s between transhumanists who are busy building the foundation of our glorious posthuman future and humanists who recognize that the “bed-nets phase” of their movement was its finest hour.